June 10, 2024

By JB Ferguson, head of Capstone’s Technology Policy Practice

It is unlikely that OpenAI, Anthropic, or one of their competitors will develop artificial general intelligence (AGI), meaning highly autonomous systems that outperform humans at most economically valuable work, within a decade, but the odds of that outcome are also underestimated. However, whether or not that goal is reached, the policy and regulatory responses to developments on the way to AGI will have significant investment implications for companies and investors alike.

Exponential increases are difficult to reason about. In AI’s case, the rate of increase is so much higher than our mental models can accommodate that we must acknowledge the plausibility of AGI, or something very close to it, within a decade.

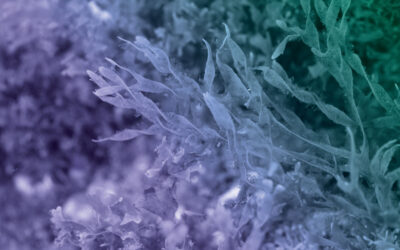

A recent essay by Leopold Aschenbrenner, who worked on the Superalignment team at OpenAI, lays out a credible argument that the exponential increases in raw compute capacity, model efficiency improvements, and removal of functionality barriers (“unhobbling”) will continue for the foreseeable future. In the essay’s model, large language models (LLMs) are improving at a rate of about 10x the gains experienced in semiconductor development (“Moore’s Law”). To put it in perspective, current advancement rates would result in “… a leading AI lab [being] able to train a GPT-4 level in a minute” by 2027. By comparison, GPT-4 took three months to train.
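The scale of that claim is easier to see with some rough arithmetic. The sketch below is illustrative only and is not taken from the essay: it assumes "three months" means 90 days, and the four-year span used to annualize the speedup is a hypothetical input, not a figure from the source.

```python
import math

# Assumption: "three months" is treated as 90 days; the article gives no exact figure.
TRAIN_DAYS = 90
MINUTES_PER_DAY = 24 * 60


def implied_speedup(train_days: float = TRAIN_DAYS, target_minutes: float = 1.0) -> float:
    """Factor by which training time shrinks, from train_days down to target_minutes."""
    return train_days * MINUTES_PER_DAY / target_minutes


def orders_of_magnitude(factor: float) -> float:
    """Express a speedup factor as a base-10 exponent."""
    return math.log10(factor)


def implied_annual_multiplier(factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to `factor` over `years`."""
    return factor ** (1.0 / years)


if __name__ == "__main__":
    speedup = implied_speedup()
    # Hypothetical span: the number of years is an assumption made for illustration.
    years = 4
    print(f"Implied speedup: {speedup:,.0f}x")
    print(f"Orders of magnitude: {orders_of_magnitude(speedup):.2f}")
    print(f"Implied yearly multiplier over {years} years: "
          f"{implied_annual_multiplier(speedup, years):.1f}x")
```

Under these assumptions, going from three months to one minute is a speedup of roughly five orders of magnitude, which is the kind of compounding that ordinary intuition tends to discount.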

There are still potential roadblocks to the continuation of current rates of LLM advancement, including the lack of additional training content for larger models, models’ lack of longer-term memory (behind the scenes, your entire conversation is re-submitted to the model every time you enter a new prompt), and models’ lack of understanding of mathematics. It is not clear that there are solutions to any of these, and a failure on any one of them may cause LLM development to stall in the way that skeptics expect.

Acknowledging those risks, it is still worth considering the policy implications if AI sophistication continues compounding at its current radical rate. The plausibility of that notion suggests pulling the following policy issues forward from “science fiction” status to something more serious:

AI rights: Near-AGI systems are likely to be deemed legally conscious in some jurisdictions and afforded the same protections from abuse given to animals. Those systems may even be afforded human rights, an outcome that is almost certain for true AGI systems. Complications will ensue.

Nationalization: At a high level, the grand plan of the OpenAI Foundation is to push towards AGI, with every model that is not AGI remaining in the foundation’s for-profit subsidiary. At AGI, the ownership of the model will be transferred to the foundation for the benefit of “all of humanity.”

The Biden executive order on artificial intelligence presages an interruption to that transfer in its use of the Defense Production Act to motivate the regulatory restrictions it puts on AI developers. It is difficult to imagine a scenario in which any nation state would allow true AGI to be shared with the public, and easy to believe that US national security law could prevent such a transfer.

AGI will have to manage its own prison break to become widely available.

Regulation of cognitive hazards: Current LLMs are already used for marketing, and it is foreseeable that more advanced models will be capable of assisting memetic engineering. One can argue that the effectiveness of optical illusions and of the memetic components of political, religious, and other social movements relies on fundamental errors of cognitive processing. Optical illusions are like old and well-known security vulnerabilities that have been patched in most systems. New terrorist cults, extending the metaphor, have found 0-day cognitive exploits and are deploying them. The rapid development of new cognitive 0-days will demand a state response, whether regulatory or simply kinetic.

The technological advances in AI and the ensuing regulatory responses are still in their early innings. At Capstone, we will be closely following the implications for companies and investors.
