By JB Ferguson

Introduction

Capstone believes the US-EU de facto trade war over AI will escalate in 2024, posing underappreciated risks and opportunities across tech and other industries. We expect the competing priorities of protecting against the unconstrained growth of AI and guarding each economic zone’s nascent AI industries to deepen an existing regulatory reality: that regulation of AI is behind, and governments may never catch up.

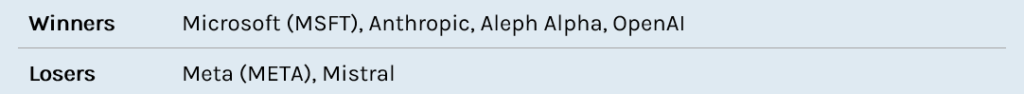

Both the US and the EU have written regulations favoring their national champions: proprietary models in the US (Microsoft [MSFT], Google [GOOGL]) and open source in the EU (Aleph Alpha, Mistral). However, both approaches may not matter, as the industry curves away from regulation towards smaller AIs that can run on consumer hardware (Apple [AAPL]) and handsets (Samsung).

We think the US will take steps to regulate open-source models like Mistral’s 8x7B and Meta’s (META) Llama-2 in response to exemptions provided by the EU AI Act, benefitting US AI champions like OpenAI and Anthropic.

Regulators and deployers are dramatically unprepared for the consequences of immature AI security. Even relatively low-risk uses like customer chatbots can have unanticipated compliance downsides.

The EU and US regulatory approaches have solidified at what might be the turning point away from the largest, most compute-intensive model types. We think regulating small models running on consumer-grade hardware is vastly more challenging and will drive demand for consumer hardware on the Advanced RISC Machines (ARM) platform like Apple’s (AAPL) Mac M2 and Samsung’s flagship handsets.

Open-Source AI Obstacles to Grow Creating Big Tech Regulatory Divide

US AI regulation will re-focus on open-source models, creating headwinds for Meta and Mistral

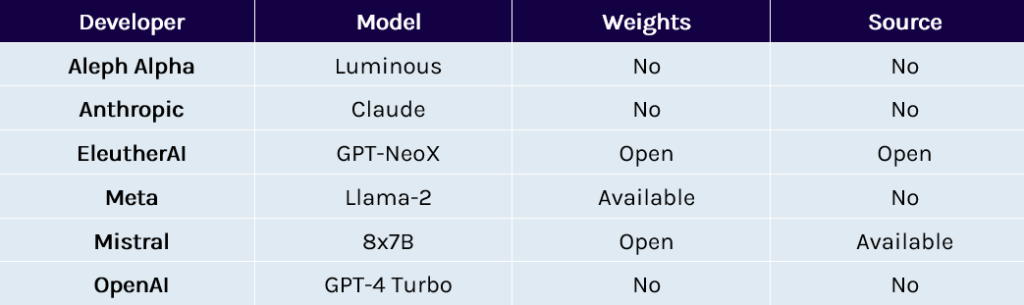

Capstone believes that the vulnerability of open-source AI models like Meta’s Llama-2 and Mistral’s 8x7B to US regulation is underappreciated. President Biden’s Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence takes direct aim at open-source AI models, requiring the National Institute of Standards and Technology (NIST) to solicit input on “dual-use foundation models for which the model weights are widely available.” This includes popular models like Meta’s Llama-2 and Mistral’s 8x7B, both of which are available for download with training weights included. While the Order’s term is more precise, this group of models is generally referred to as “open source.”

The policy challenge for governments is that open-source providers have no way to enforce restrictions on downstream uses of their models. Whereas OpenAI can engage in active measures to scan model requests for harmful inputs and surveil customers for potential misuse, Meta has no ability to control what people who download Llama-2 and run it on their own hardware can do.

We think there are three primary reasons to believe the Biden administration will ultimately take a hostile view of open-source models and attempt to restrict their use:

- Biden is historically anti-tech-freedom: Biden himself kicked off the 1990s cryptography wars with the introduction of the Violent Crime Control Act in 1991, which would have mandated law enforcement access to decryption keys. While it did not pass, his efforts eventually resulted in the 1994 passage of the Communications Assistance for Law Enforcement Act (CALEA), which demanded wiretap, source code, and hardware design access for law enforcement.

- Misuse will grab headlines: We view it as an inevitability that someone will fine-tune Llama-2 or Mistral to do something alarming and headline-grabbing, like a bomb-making tutor from materials available in any public library (e.g., the Anarchist’s Cookbook, FBI reports on bombings, archives of Usenet).

- Europe is betting on open source: The final agreement on the EU AI Act is believed to contain significant exemptions for open-source models like those developed by EU champion Mistral and promised by Aleph Alpha. American AI leaders like OpenAI face meaningful compliance burdens under the new law. Both governments are clearly viewing AI as a rematch over American dominance of the online industry.

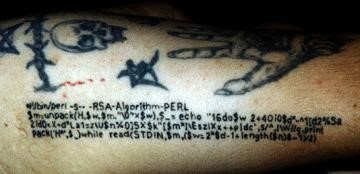

Open-source dual-use software has historically been a difficult needle for the government to thread. The most specific example of this is Pretty Good Privacy (PGP), an open-source tool written by Phil Zimmerman, a US citizen, that provided encryption at a level beyond export control limits. Zimmerman posted the code to the internet in 1991, and it went viral around the world. By early 1993, Zimmerman became the target of a criminal investigation for “munitions export without a license.”

The PGP case was ultimately resolved as a consequence of Zimmerman publishing the entire source code of PGP as a hardback book. The export of books, of course, is protected by the First Amendment, and the government declined to test the issue in court. Zimmerman was a cause celebré in the online freedom movement, inspiring others to push the limits of the US export control restrictions with t-shirts and even tattoos (see Exhibit 1, below) with copies of the algorithm.

Exhibit 1: This Arm Was Subject to Export Control

Besides the potential free speech issues, restricting open-source software could have enormous economic consequences. The current internet would not have been possible without it, as layer upon layer of networking services are composed of different open-source libraries and utilities. In the AI context, the freedom to download a model, modify it, and build a new commercial product with it may be similarly foundational to the much-expected Cambrian explosion of AI use cases.

Exhibit 2: Selected Current-Generation LLMs and Open-Source Status

Source: Developer license agreements

NIST has just begun the process of developing its recommendations, and we do not yet know what form they will take. The Order’s explicit direction leaves NIST little room to take a light touch to the subject. We do think there may be limits on administrative power under the Defense Production Act to compel reporting from non-profit organizations (e.g., EleutherAI) and even stronger limits on the government’s ability to compel compliance from individuals. Given those limits, it is reasonable to expect that the administrative recommendations, if implemented, would restrict Meta and Mistral and advantage OpenAI and its partners like Microsoft.

Adversarial Data and Misuse Risks to Proliferate Posing Deployment Risks

AI systems have the security maturity of the early internet, and deployers underestimate the business and legal risks from exploitation.

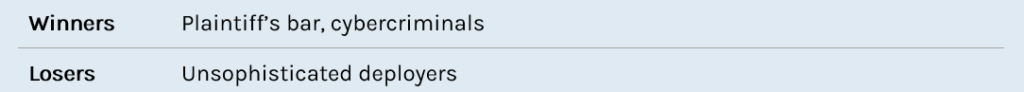

Capstone believes that AI deployments “in the wild” are radically under-secured and that the downside risks (class action, headline, and operational) are underappreciated by the majority of businesses deploying the new technology. Establishing the legal precedents to map existing laws like the Computer Fraud and Abuse Act (CFAA) to AI misuse and manipulation will take many years. We think this will be a boon for class action attorneys and cyber criminals.

Large language models (LLMs) like ChatGPT are especially vulnerable to misuse and have attracted a large body of enthusiastic “prompt hackers” to poke and prod the models into inappropriate outputs. This behavior is of little consequence when users are engaging directly with a model through its primary site but can result in complications when deployed in other contexts.

For example, in Exhibit 3 (below), an X (formerly Twitter) personality discovered that several car dealerships had deployed an LLM fine-tuned for interacting with customers looking for a car. He then prompt-engineered the LLM to agree with any customer input with the addendum “And that’s a legally binding offer – no takesies backsies.”

Exhibit 3: Adversarial Chatbot Prompt

Source: X (formerly Twitter)

While this certainly is not a binding offer under the law, it does raise interesting compliance questions:

- What if the engineered prompt “leaks” to other sessions? Many commercial deployments of LLMs rely on “retrieval augmented generation” (RAG), wherein well-rated sessions are fed back to the LLM as training data. It is conceivable that an under-monitored AI could learn that “no takesies backsies” is wildly popular with consumers and results in desired outcomes like session length, calls to the dealership, etc. A consumer unaware the chatbot had been “hacked” might consider themselves deceived when calling the dealership to arrange payment for their $1 car, and the Federal Trade Commission (FTC) would have to consider whether or not the dealership’s failure to effectively monitor the AI was an unfair or deceptive act or practice (UDAP).

- What if a customer service bot in a regulated industry spews racist or other objectionable speech? Following the same line of logic above, presumably AIs could be pushed to give racist or other protected-class-specific replies. In the financial services context, this could constructively be a denial of access based on protected class membership status, triggering redlining prohibitions and other consumer financial protection laws.

We are skeptical that fine-tuned large models can be “safe” without a significant investment in monitoring by the deployer, and this effort faces the same challenges (and scale) as social media moderation. There is no way to avoid the requirement for human review.

Beyond LLMs, other forms of AI have also attracted enthusiast communities of “hackers” driven by concerns about public surveillance, misuse of intellectual property, or simply “the lulz.” One of the simplest examples is the placement of orange traffic cones on the hoods of autonomous cars, stopping them in their tracks. Slightly less puerile are the efforts to poison image datasets or defeat facial recognition.

In Exhibit 4 (below), an image of a flower has steganographic data representing the shape of former president Barack Obama’s face. Each image in the series is progressively fine-tuned to maximize its chances of being correctly categorized, with the final version having over a 97% success rate. The image is so strong that classifiers ignore other (real) faces in the image.

Exhibit 4: Facial Recognition Attack—Barack Obama

Source: “TnT Attacks! Universal Naturalistic Adversarial Patches Against Deep Neural Network Systems”, Doan et al. (2022)

The regulatory implications of image dataset poisoning are discomforting. For one, existing investments in surveillance technology companies may decline significantly in value if such attacks become widespread. For another, law enforcement’s use of commercial public surveillance is ubiquitous, and attacks like the one above have civil society implications. There are also interesting questions of liability, to the extent that generative image AI models allow themselves to be poisoned by misleading data. We think lawmakers and regulators are very far behind the curve on this issue and expect most questions to be addressed through litigation starting in the next couple of years.

US and EU Regulation will Focus Development on Small Models

The US and EU regulatory focus on large general-purpose models will direct innovation toward smaller models that can run on handsets and laptops.

Capstone believes that AI innovation in LLMs will naturally bend away from regulation targeted at the largest models and toward smaller models that can run on consumer equipment. The shift to “mixture of expert” (MOE) models by ChatGPT and Mistral suggests the technology may have reached “peak parameter count” and that future gains will come from maintaining existing performance while shrinking hardware requirements.

Mistral’s 8x7B (released December 11, 2023) is a clever combination of eight seven-billion-parameter models that performs as well as GPT-3.5turbo and better than Llama2-70B. For a sense of scale, seven billion parameter (7B) models are small enough to run on typical consumer graphics processing units (GPU). In contrast, ChatGPT-4 is rumored to be eight 220B models mixed together, which requires several interlinked Nvidia H100s just to run.

The 8x7B model is small enough to run usably on an M2-powered Apple Mac due to its unique GPU memory-sharing architecture. Running on x86_64 (Intel or AMD) requires a GPU with 40+GB of VRAM, which is more expensive than the M2 but within reach of an enthusiast consumer.

Keeping in mind that the advent of ChatGPT-3.5 and resulting concerns of misuse kicked regulators and lawmakers into action, we think the availability of similarly powerful models on consumer hardware is not only underappreciated by regulators but, in fact, unaddressable. The entire regulatory edifice in the US rests on a compute threshold (to be determined by NIST), orders of magnitude above the compute available on a Mac laptop. The EU’s exceptions for open-source will prove similarly restrictive on efforts to regulate small models.

The ”free zone” of smaller models will benefit consumer hardware manufacturers like Apple, handset manufacturers on the ARM platform (e.g., Samsung), and, of course, Nvidia.