California Data Breach Lawsuits, Section 230 Decisions, Privacy Laws to Create Headwind for Meta, Amazon, Google

December 27, 2022

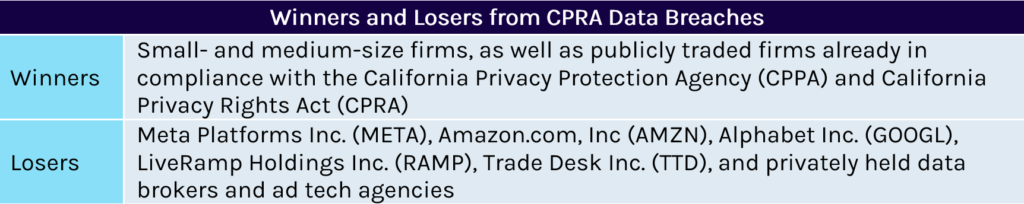

Capstone believes many investors are underappreciating the likely enforcement ramp-up by the California Privacy Protection Agency (CPPA) as the California Privacy Rights Act’s (CPRA) provisions take effect on January 1, 2023. The CPRA includes provisions for an expansive private right of action in the event of a data breach and significant civil penalties. As a result of the statutory minimums for sizing fines, we expect Big Tech platforms that violate the CPRA will have to agree to higher settlements.

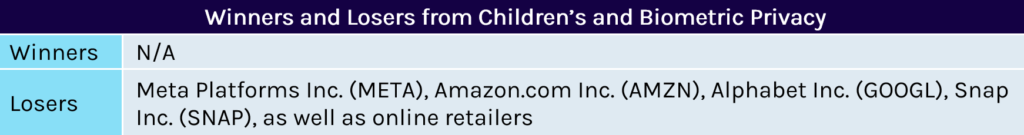

We expect the momentum for focused privacy laws in areas such as biometrics and children’s privacy, as well as regulatory action by the Federal Trade Commission (FTC), to continue in 2023. These two subcategories have attracted substantial consensus and bipartisan support, as lawmakers feel they can protect consumers without delving into the core disagreements involved with broader privacy rights.

The Kids Online Safety Act (KOSA) initially was included in the federal omnibus spending package and later removed. However, we expect that providing additional protections for young users online will be a greater priority for Congress in the coming year.

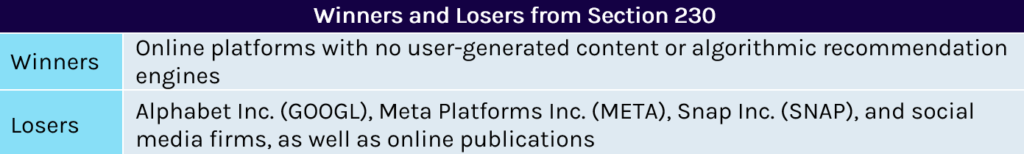

In addition to more stringent privacy laws, we anticipate that online platforms also will face growing headwinds to their content moderation activities as federal courts adjudicate the scope of Section 230 of the Communications Act in 2023. The decisions in NetChoice v. Paxton and Gonzalez v. Google are likely to have sweeping implications for how the internet is regulated, regardless of how the US Supreme Court rules. Should the high court side with any of the parties challenging Section 230, we expect a wave of subsequent litigation attempting to hold online platforms liable for the content they host.

A Deeper Look

Data Breaches to Generate Greater Fines Under CPRA

On January 1, 2023, the California Privacy Rights Act will fold into the California Consumer Privacy Act (CCPA) and become enforceable. While the CCPA already includes provisions for Private Rights of Action (PRA)—a mechanism that allows private citizens or consumers to bring complaints against alleged violations—the CPRA broadly expands on the PRA to include data breaches. In a class action that is fully litigated, the CPRA calls for penalties of $2,500 for basic violations and $7,500 for willful violations per class participant. While we believe many of the legal challenges brought will not reach that stage, we expect that settlements will result in fines of $250 to $750 per class action participant, a materially higher figure than today’s average settlement range of $13 to $90 (where credit card and financial information is not included in the incident). Furthermore, under the CPRA, the 30-day right-to-cure period for resolving claims will be removed, which we believe will significantly increase the probability that regulatory enforcement action and settlements for privacy violations will be reached.
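To make the per-participant figures above concrete, the short Python sketch below scales each quoted range by a class size. The class size of 100,000 is purely hypothetical and chosen only for illustration; the dollar ranges are the ones quoted in the paragraph above, and the names `PerParticipantRange` and `exposure` are our own.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class PerParticipantRange:
    """A low/high dollar amount per class action participant."""
    label: str
    low: int
    high: int


# Per-participant figures quoted in the text above.
RANGES = [
    PerParticipantRange("Current average settlement", 13, 90),
    PerParticipantRange("Expected settlement under CPRA", 250, 750),
    PerParticipantRange("Fully litigated CPRA penalty", 2_500, 7_500),
]


def exposure(class_size: int, r: PerParticipantRange) -> Tuple[int, int]:
    """Return (low, high) total dollars for a class of the given size."""
    return class_size * r.low, class_size * r.high


if __name__ == "__main__":
    class_size = 100_000  # hypothetical class size, not from the source
    for r in RANGES:
        low, high = exposure(class_size, r)
        print(f"{r.label}: ${low:,} to ${high:,}")
```

Under that assumed class size, the expected settlement range works out to $25 million to $75 million, compared with $1.3 million to $9 million at today’s average settlement levels.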

The first major privacy settlement under the CCPA was reached in September 2022 against LVMH (LVMUY on the over-the-counter market) subsidiary Sephora for failing to adhere to Global Privacy Control (GPC), a new protocol that automatically signals to websites a user’s designated privacy preferences. While the $1.2 million settlement was immaterial to LVMH, we believe the enforcement action is indicative of strong enforcement headwinds to come in the next year and lays the foundation for higher amounts in the future by establishing industry knowledge. Furthermore, California Attorney General Rob Bonta (D) warned “there were no more excuses” for companies to not follow GPC, opt-out signals, or the broader privacy laws.

The CPPA is undergoing its rulemaking process to determine the exact scope of the CPRA through implementing regulations. With an annual budget of roughly $10 million, the CPPA will have the resources to take an aggressive enforcement posture and effectively hold Big Tech firms accountable for privacy violations. In a preview of its positions, the CPPA has largely ignored concerns from industry representatives in its most recent CPRA update. However, the agency is still dealing with staffing issues, which we do not expect to be resolved until mid-to-late 2023. The delays in finalizing its initial responsibilities could slow the pace of enforcement in the first few months of 2023.

We believe that during H2 2023, the initial period when the agency can bring enforcement action, only the most egregious violations will be pursued. However, it is likely the CPPA and AG Bonta will quickly turn their sights on Big Tech firms that are not CCPA-compliant by early 2024, and the continued uncertainty will likely raise compliance costs in the final few months of 2023. It is unlikely that small- and medium-size platforms will be targeted in 2023. Despite the CPPA’s need to establish authority, we do not believe it will have the capacity to pursue low-impact violations. Additionally, what full compliance entails will likely be determined through litigation over the first few years of enforcement.

Consensus at Federal and State Levels to Legislate on Biometric and Children’s Privacy

Children’s Privacy

In September 2022, Governor Gavin Newsom (D) signed into law the California Age-Appropriate Design Code Act (A.B. 2273). The bill compels online platforms that are likely to be accessed by children to provide greater protections for users younger than 18. For example, a user who is assumed to be a minor must have the highest level of privacy settings turned on by default, such as blocking precise geolocation data from being shared, and platforms are banned outright from using dark patterns on such users. In the next year, we expect similar legislation to be introduced by Democratic states that failed to pass a comprehensive bill in 2022, including New York and Washington state.

We expect that online platforms that have a large share of users younger than 18, including Snapchat and Meta’s Instagram, will experience challenges in complying with A.B. 2273. Due to the popularity of these two platforms, we believe it will be difficult to correctly identify the specific users who are covered by this law, increasing the probability of large civil penalties. A.B. 2273 has a maximum penalty of $2,500 for negligent violations and $7,500 for intentional violations. It is likely that in a class action, penalties would be comparable to data breach fines under the CPRA.

However, A.B. 2273 does not include a PRA and is enforceable only by the state attorney general, decreasing the probability of enforcement in 2023. Additionally, NetChoice Corp. sued California for passing A.B. 2273, alleging that it violates the First Amendment rights of online platforms. Based on conversations with stakeholders, we believe AG Bonta’s office likely anticipated the lawsuit, and we do not believe the lawsuit will be successful.

At the federal level, Congress and consumer protection regulators also are poised to revisit children’s privacy issues in 2023. We believe the initial inclusion of KOSA in the year-end omnibus package indicates that children’s privacy will be an underappreciated priority in the next Congress. KOSA attempts to address the dangers social media poses to young children and would require online platforms to provide additional safeguards for children who use their platforms. For example, platforms would have to remove “addictive” features, establish a privacy-by-design interface, and give children and their parents additional autonomy over how their data are collected and used. However, progressive privacy advocates largely do not support KOSA, as they believe it leaves too much discretion to the platform to determine what features should be included.

The FTC recently reached an agreement with privately owned Epic Games for violating the Children’s Online Privacy Protection Act (COPPA). Commissioners voted unanimously to issue the complaint. In total, the company must pay $520 million in damages to affected consumers, a record-breaking penalty for violations of COPPA. The FTC pursued the company’s use of manipulative dark patterns to nudge children to make additional purchases in their catalog of games. We believe the settlement will allow the FTC to build on a narrower interpretation of the statute and the market underappreciates the potential for additional enforcement actions under COPPA given the clear and shifting FTC priorities to protect kids online.

Biometric Privacy

We expect that states will continue to introduce and pass biometric privacy laws in 2023, especially given the increased appetite seen following the successful $650 million settlement with Meta Platforms Inc. (META) for violating the Illinois Biometric Information Privacy Act (BIPA). Texas AG Ken Paxton (R) sued Meta in February 2022, building on the case that was made in Illinois but using Texas’ equivalent statute, and we believe that suit will result in a settlement in the hundreds of millions of dollars. While the market largely focuses on lawsuits filed under the Illinois BIPA, we expect equally stringent laws to be introduced in 2023, especially in Democratic trifecta states, such as New York and Washington.

Beyond Big Tech firms, we will continue to evaluate the rising levels of biometric privacy lawsuits against online consumer retail firms, such as Target Corp. (TGT) and Walmart Inc. (WMT). Online retailers have continued to be sued for offering services that allow customers to “try on” products prior to buying them. These services typically require a user to provide facial recognition data. While we do not believe “try on” services are inherently a violation of BIPA laws, defendants have not succeeded in getting these allegations dismissed thus far.

Attempts to Narrow Section 230 Intensify in the Courts

NetChoice v. Paxton

Due to split decisions in the US Courts of Appeals for the Fifth and 11th circuits, we expect the Supreme Court will grant NetChoice’s petition for certiorari, filed in December, and take this case in the upcoming year. Texas’ H.B. 20 and Florida’s S.B. 7072 effectively carry the same effects for online platforms. If either bill is eventually ruled constitutional, online platforms such as Meta would lose their editorial discretion for moderating what users post. H.B. 20 would treat online platforms with more than 50 million users as common carriers, preventing them from “censoring” any user for any political view.

The Supreme Court provided an early preview of its interest in these issues and how it could proceed. In May 2022, NetChoice filed an emergency application with the Supreme Court to place a stay on the initial decision from the Fifth Circuit. The emergency application was first presented to Justice Samuel Alito, per court procedure for these types of applications. Due to the heavy consequences of any change to Section 230, Justice Alito referred the application for the full court’s review. Ultimately, a stay was granted, and the application was passed back down to the lower court.

Should the Supreme Court side with AG Paxton and rule H.B. 20 constitutional, then the protections that Section 230 affords online platforms would largely be stripped. In a worst-case scenario, we believe Meta will likely cut access to the platform for users in certain states. We estimate that this step could cost the company roughly $530 million in quarterly revenue. Additionally, it is likely that other Republican trifecta states would pursue similar measures.

Gonzalez v. Google

Following the 2015 Paris attacks, the Gonzalez family sued Google for allegedly promoting videos on its video-streaming platform YouTube that aided in the recruitment of terrorists. Specifically, the family argues that recommended videos pushed by an internally developed algorithm are not protected under Section 230, as algorithmic recommendation is not a traditional editorial decision of the kind that shields the platform from liability.