By David Barrosse, CEO

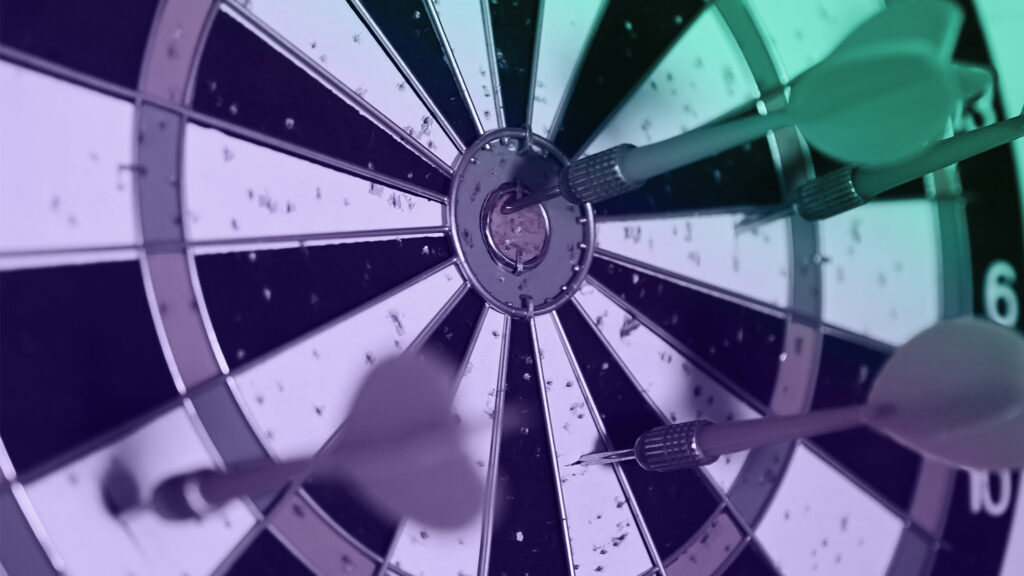

August 17, 2021 — Fundamentally, we do two things at Capstone. We make policy predictions, and then we model how those predictions will impact companies or sectors. However, the prediction business has a very poor reputation, especially in the world of politics and policy.

The reality is that individuals in our industry (and in business in general) are notoriously inept at judging the likelihood of uncertain events. And getting judgments wrong, of course, can have serious consequences. Policy and regulatory impacts on companies and broader industries are especially complex, poorly understood, and tough to quantify. That’s complicated even further by the fact that predictions are colored by the forecaster’s susceptibility to pernicious cognitive biases, concerns about reputation, fear of sticking out from the crowd, and a lack of genuine self-reflection.

The word “pundit” is mostly used to describe TV talking heads who are hopeless at political prediction. Worse, many pundits will claim victory no matter the outcome, thereby making it impossible to be wrong. It’s no different in the investment analysis world, where predictions are often intentionally vague to maximize wiggle room should they prove wrong, or broad enough not to really matter.

When I founded Capstone eleven years ago, I was convinced we could create processes that would lead to accurate predictions. In the early days of the firm, we made it a habit to ensure we were getting balanced inputs – exploring both sides of the issue, reading deeper, and speaking to a wider range of smart voices. However, beyond that simple philosophy, we really struggled to find more sophisticated ways to improve our accuracy. Then, five years ago, a cognitive psychologist named Philip Tetlock wrote a book called Superforecasting: The Art and Science of Prediction. When I first saw the title, I was skeptical. I had seen many business books that made bold promises backed up by underwhelming advice. However, reading the book changed my mind and eventually changed our entire research process.

The book Superforecasting is an analysis of a multi-year study pitting ordinary folks against the U.S. intelligence community. The study results showed that a group of people, later dubbed superforecasters, were able to significantly beat the predictive power of the intelligence community, despite not having access to classified material. The book studied what the superforecasters had in common. What it found was the value of a community of smart, open-minded people using a rigorous, systematic, and transparent approach to prediction that combines statistical analysis with intentional efforts to remove cognitive bias from their views. They weren’t afraid to change their minds whenever the facts changed, they leveraged effective teams to utilize the wisdom of the crowd, and they obsessed over every prediction, asking why it went right or why it didn’t and never letting themselves off the hook.

Early in 2020, Capstone teamed up with Good Judgment Inc. (GJ), an organization affiliated with Tetlock and made up of the original superforecasters. GJ helped us design an even more rigorous process to improve our predictive accuracy further. In addition, we jointly designed a series of multi-day mandatory training sessions for all of our analysts. This led to the adoption of superforecasting techniques across all of our research and more rigorous measurement of all our predictions.

The most visible change in our research has been more concrete predictions, banning squishy words such as “likely.” We’ve worked to sharpen our forecasts to include a precise definition of the prediction, the time frame involved, and a numeric probability. We don’t want any wiggle room. The importance of a falsifiable prediction cannot be overstated. We have to be in a position to be wrong. A “heads I win, tails you lose” prediction has no credibility with people who must commit capital for a living.

Committing to making predictions that don’t let us off the hook also means committing to a more complex research process. When we make predictions (such as a 75% probability that Congress will expand electric vehicle tax credits in 2021, 80% odds that the FCC reinstates net neutrality by the end of 2022, or only a 15% chance that a Booker-Wyden-Schumer-like bill, friendly to the country’s budding legal marijuana industry, will pass Congress), readers should know that these predictions are the culmination of rigorous and overlapping processes. These include taking great care to consider what has happened in the past (base rates) and layering on research, data, and conversations with relevant voices to help us understand whether there are any reasons things will be different now. They include conducting a premortem to seriously examine where and how we could be wrong, and what the odds of that are. And they include measuring and tracking the validity of our predictions over time using Brier scoring, and conducting data analysis on why we got certain things right and why we got other things wrong.
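For readers unfamiliar with Brier scoring, the idea can be sketched in a few lines of Python. This is a minimal illustration, not Capstone’s actual tooling: the `Forecast` record and `brier_score` function are hypothetical names, the questions are placeholders, and the outcomes are invented purely to show the arithmetic. It uses the common single-probability form of the score, the average of the squared gap between the stated probability and what happened (1 if the event occurred, 0 if not), which runs from 0 (perfect) to 1 (confidently wrong every time). Tetlock’s research uses a closely related variant that sums over both outcomes and therefore runs from 0 to 2.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Forecast:
    """A falsifiable forecast: what, by when, how likely, and what happened.

    Hypothetical record type for illustration only.
    """
    question: str       # precise definition of the prediction
    deadline: str       # time frame, e.g. an ISO date
    probability: float  # stated probability that the event occurs (0 to 1)
    outcome: bool       # True if the event occurred by the deadline


def brier_score(forecasts):
    """Mean squared error between stated probabilities and outcomes.

    Single-probability form: 0.0 is perfect, 1.0 is maximally wrong,
    and always answering 50% scores 0.25.
    """
    if not forecasts:
        raise ValueError("need at least one resolved forecast")
    total = 0.0
    for f in forecasts:
        if not 0.0 <= f.probability <= 1.0:
            raise ValueError(f"probability out of range: {f.probability}")
        actual = 1.0 if f.outcome else 0.0
        total += (f.probability - actual) ** 2
    return total / len(forecasts)


# Placeholder questions with INVENTED outcomes, for arithmetic only.
resolved = [
    Forecast("Placeholder question A", "2021-12-31", 0.75, True),
    Forecast("Placeholder question B", "2022-12-31", 0.80, False),
    Forecast("Placeholder question C", "2022-12-31", 0.15, False),
]

score = brier_score(resolved)
print(f"Brier score: {score:.3f}")
```

In this made-up example, the confident 80% call that did not come true accounts for most of the score of roughly 0.24, which is barely better than the 0.25 earned by answering 50% on everything. That is the sense in which a numeric, falsifiable forecast puts the forecaster in a position to be wrong.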

More importantly, when facts change, we update our probabilities. This may lead to strange numbers (83% chance of this or 13% chance of that) in our research that might make some clients skeptical of our supposed precision. However, one of the key findings in Tetlock’s research is that frequently updating an original prediction, in small increments, dramatically improves accuracy. When our analysts publish updates, we challenge them to consider how new information should change the original prediction and urge them to make slight adjustments.

There are myriad unseen processes that go into each piece of research you read. Ultimately, those processes mean better predictions and more value for clients. We aren’t afraid to show our work.
